The research presented in this dissertation utilised a quasi-experimental design, involving a control group (n = 15) and an experimental group (n = 15), to answer the research question posed and thereby evaluate, in empirical terms, the effect of a game-informed approach to assessment on the academic achievement of Form 5 male students in Malta.

Video 6 Discussion of Findings [created by S. Bezzina]


This section discusses the research findings by interpreting and critically evaluating the data and results obtained from both the questionnaire and the post-intervention test, in view of the present study and the scholarly literature. The implications of the findings are then assessed and explored in both their practical and theoretical dimensions.

The use of digital technologies for assessment purposes

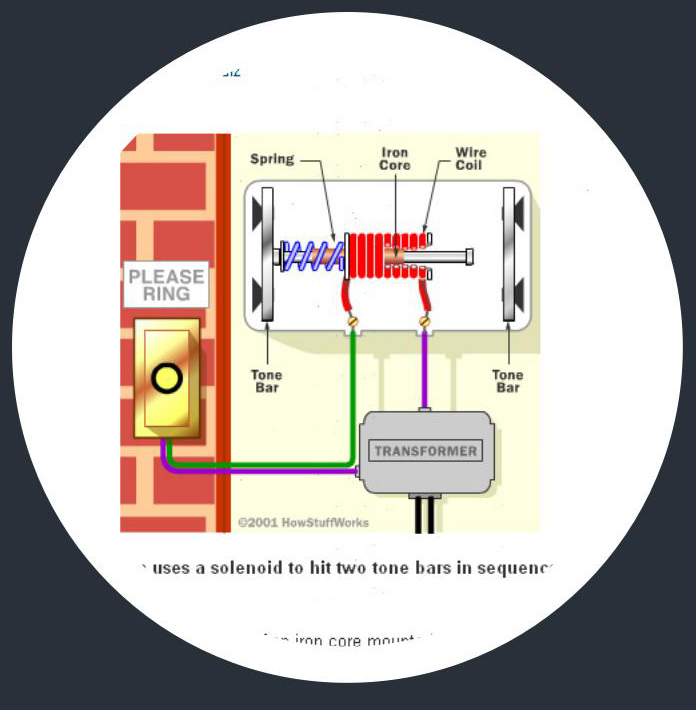

Figure 16 Digital Assessment Technologies [edited and adapted by S. Bezzina]

The initial results from the questionnaire point towards an extremely limited use of digital technologies in assessment procedures prior to the research, in both Physics and other subjects. The findings also confirm that all the students were on a par in their previous use of digital assessment technologies and thus, ethically, no participating group was at a disadvantage. Seventy-five percent of the technologies listed in the questionnaire (including wikis, clickers and web-based assessment systems) were never used by the students for assessment purposes in Physics. Since computer labs with internet access are available throughout the school, this finding is likely to be related to the reluctance of traditional educators ‘to make substantial changes in assessment processes’ (Russell et al 2006, p 475) and adopt such technologies, in view of the ever-increasing institutional demands for reliability and validity (Knight 2001). No statistically significant difference was found between the two groups in the frequency of use of interactive whiteboards and online quizzes for assessment during past Physics lessons, although such use was still limited (from ‘weekly to never’). All the students indicated the use of clickers during assessment activities in ICT lessons, yet again only sporadically (from ‘every term to rarely’). This further reinforces the research design in terms of controlling for a possible extraneous variable (Robson 2011) and establishing equity between groups, as the use of digital technologies for assessment purposes represented an innovation for all participants. As such, any reactivity to the intervention resulting from the participants’ awareness of being observed, known as the Hawthorne effect, can be attributed equally to both groups (Hedges 2012).

The effect of game-informed assessment on students’ academic achievement

Although the adjusted post-intervention mean score measuring academic achievement was higher for the experimental group (51.5%) than for the control group (45.3%), this variation does not represent a statistically significant difference in the students’ overall achievement (F(1,27) = 2.663, p > 0.05, partial η² = 0.090). This suggests that a game-informed approach to assessment has an overall effect on students’ academic achievement comparable to that of its traditional counterpart, where achievement is operationally defined over the different cognitive processes set by Bloom’s taxonomy (Bloom 1956) and measured using a conventional, objectively scored achievement test. To an extent, this is in contrast to the meta-analysis performed by Black and Wiliam (1998) and more recent empirical research (Araceli Ruiz-Primo and Furtak 2006, Wang 2007, Wiliam et al 2004, Wininger 2005), suggesting that students who are formatively assessed are more inclined to obtain higher scores on summative tests. However, as already discussed in the Review of Literature, such findings should be interpreted with caution, as they are insufficient to demonstrate conclusively the effect of formative assessment on students’ academic achievement (Dunn and Mulvenon 2009). It is also worth noting that, even though the difference in means between the game-informed and traditional assessment groups is not statistically significant, the associated p-value is relatively low (p = 0.114): were the educational intervention to have no effect, a difference at least this large would be expected to arise by chance in only about 11.4% of cases. Furthermore, the effect size, expressed as the proportion of variance associated with the game-informed approach rather than with other variables, is medium (partial η² = 0.090) (Richardson 2011).
These findings are even more encouraging given that the game-informed assessment group, having a traditional assessment background, managed to obtain a higher (although not statistically significant) overall score than students following a traditional assessment, in a traditional context and on a traditional achievement test. This lack of constructive alignment between teaching/learning activities and assessment (Biggs 2003) represented a further challenge for the experimental group.
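
The reported statistics can be checked for internal consistency with a short calculation. The sketch below assumes that the comparison of adjusted means was a one-way analysis of covariance with two groups and a single covariate (an assumption suggested by the adjusted means and the error degrees of freedom, not stated in this section), and uses the standard identity relating partial η² to the F ratio and its degrees of freedom.

```latex
% Assumed design: N = 30 participants, k = 2 groups, c = 1 covariate
\begin{align*}
df_{\text{effect}} &= k - 1 = 2 - 1 = 1 \\
df_{\text{error}}  &= N - k - c = 30 - 2 - 1 = 27 \\[4pt]
\eta_p^2 &= \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}}
          = \frac{2.663 \times 1}{2.663 \times 1 + 27}
          = \frac{2.663}{29.663}
          \approx 0.090
\end{align*}
```

Under these assumptions, both the degrees of freedom in F(1,27) and the partial η² of 0.090 agree with the values reported above.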

Figure 21 The Effect of a Game-Informed Assessment on Students’ Academic Achievement (Freepik #13 n.d.)

However, on analysing the scores obtained in terms of the cognitive processes involved, the academic achievement of the experimental group was significantly higher, in statistical terms, than that of the control group at the higher levels of the taxonomy (Bloom 1956). In fact, the performance of the traditional assessment group at the knowledge, comprehension and application levels matched that of the experimental group. This supports the literature suggesting that traditional modes of assessment work equally well at the lower cognitive levels (Biggs 1996, Ramsden 1997), while a game-informed approach becomes more effective at the more complex and abstract levels of the cumulative hierarchy represented by Bloom’s taxonomy (Bloom 1956). This also implies that traditional assessment in the form of summative examinations, which predominantly tests students at the lower cognitive levels (Zheng et al 2008), requires adequate consideration and due diligence in preparing students at the higher levels of the cognitive domain. Such findings are in line with previous research, which reported improved academic achievement at the higher levels of the cognitive domain for students who followed various formative assessment and learning strategies (Chang and Mao 1999, Crooks 1988, Tenenbaum 1986). In turn, the observed difference may be explained in terms of the intrinsic metacognitive properties of the game-informed intervention, during which the experimental group was continuously exposed to metacognitive knowledge, along with the more traditional factual, conceptual and procedural types (Anderson and Krathwohl 2001). As such, operating metacognitive knowledge at the higher cognitive processes facilitates and promotes meaningful rather than rote learning (Mayer 2002).

The metacognitive skills practised during the intervention by the game-informed assessment group allowed the students to pay conscious attention to the learning and assessment process (Pintrich 2002). This was achieved by creating a learning and assessment context that sustained multiple ‘feedback loops’ (Sadler 1989, p 120) and a cumulative approach to both coursework and learning (Hounsell et al 2007), exemplified in the two-stage submission of practical work write-ups. Students’ self- and peer-assessment in the PeerWise activity allowed for increased participation in assessment (Carless 2007) and provided greater access to the teacher’s and peers’ expertise and experience (Taras 2001). Student-generated assessment criteria and nominated test questions in the wiki exercise, together with the collaborative nature of the task, favoured self-regulation strategies (Nicol 2014, Pintrich 2002) and encouraged teacher and peer dialogue around learning (Nicol and Macfarlane-Dick 2006). Prompt and just-in-time feedback (Chickering and Gamson 1999) during the online multiple-choice questions on in2fiziks.com, the computer adaptive test on TokToL and the offline quizzes using clickers facilitated the development of self-assessment and reflection (Nicol and Macfarlane-Dick 2006). Feedforward on these early low-stakes tasks contributed qualitatively to, and fed directly into, later work (Hounsell et al 2007), which resulted in a formal post-intervention test score measuring academic achievement. This constructive interplay between the formative and summative dimensions of assessment, in terms of enhanced metacognitive activity, significantly increased the quality of learning for the experimental group (Hounsell et al 2007).
As a result, students who followed a game-informed assessment outperformed their peers in the control group on the post-intervention test, in breaking the problems posed into basic components, noting and explaining existing relationships between different parts so as to form a new complete whole and evaluating evidence through informed judgements (Bloom 1956).

Implications of Findings

The findings of this empirical study give rise to a number of significant implications for all the stakeholders involved in assessment practices and policy. First and foremost, the students’ fundamental assumptions and core beliefs about class-based assessment, as distinct from and at times in conflict with end-of-year summative tests, must change in favour of a more comprehensive and inclusive approach. The study confirms that the informal type of assessment, happening throughout the year inside the classroom, has a direct influence on students’ academic achievement. This holds even when the latter is measured via standardised high-stakes examinations which, despite the various formative efforts in assessment throughout the scholastic year, continue to dominate the local research context (Grima and Chetcuti 2002). As such, adopting surface learning approaches throughout the year, including mere recall and reproduction of knowledge (Scouller 1998), may seriously compromise one’s achievement on end-of-year tests, especially at the higher levels of the cognitive domain of learning (Bloom 1956). Like gamers during gameplay, students should adopt a ‘lusory attitude’ (Suits 1978, p 40) towards learning, where effective voluntary participation in assessment practices involving metacognitive knowledge leads to academic achievement.

Significant implications also arise for educators adopting a game-informed approach to assessment in their daily teaching practices. First and foremost, the philosophy underpinning assessment as a means solely to certify students’ attainment (Boud and Falchikov 2006) might have to change in favour of, and with greater emphasis on, assessment for learning. For instance, teachers should not think that failure in assessment automatically equates to failure in learning. On the contrary, failure in assessment is essential to learning, as the ‘refinement of performance through replay and practice’ (Newman 2004, p 17) helps students, very much like players, to learn to fail better each time. Teachers, as well as curriculum designers and policy makers, should possibly reconsider ‘the balance between formative and summative assessment purposes’ (Knight 2001, p 25), in terms of the formative use of summative practices (Black et al 2003). In addition, and more importantly, the way assessment is designed and delivered in class has an effect on how students think and learn. Learning gains at the analysis, synthesis and evaluation levels suggest that a game-informed approach to assessment effectively stimulated and led students to perform better at higher and more complex cognitive levels. As in games, assessment for learning practices in the classroom must be ‘seamlessly woven into the fabric of the learning environment that they are virtually invisible’ (Shute et al 2009, p 299). The nature of, and approach to, the assessment strategies employed by the teacher must be constructively aligned to the learning outcomes and teaching methods adopted (Biggs 2003). Such alignment is vital, as students tend to alter their innate learning strategies according to the context in which teaching, learning and assessment occur (Biggs 1989). Hence, a successful game-informed assessment environment fundamentally necessitates a congruent model for both learning and teaching.
